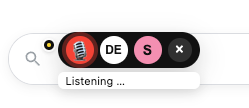

Extension icon → record

Tap the Whispererr icon in your browser toolbar and recording starts instantly. The icon badge flips to “REC” so you know it’s listening, and you can still use the dot inside inputs if you prefer.

Hold your middle mouse button, speak in your own language, and release. Whispererr listens, transcribes with Whisper, can instantly translate (e.g. German → English), sends it through generative AI, and types the result directly into any input field in your browser.

Currently supports macOS + Chrome/Brave and assumes you know how to plug in your own OpenAI token.

Live demo

This is the experience: middle-click, speak in your own language, let Whispererr translate and drop polished text exactly where you need it. Watch the overlay pop up, the translation toggle flip, and the result appear instantly in the input field.

The video is captured right from macOS Brave – no edits, no mockups. This is what you get when you install the extension and native helper with your own OpenAI key.

Whispererr is a browser extension that gives you voice-driven generative AI anywhere you can type. Middle-click in any text field, speak naturally, and Whispererr converts your speech into structured AI output: emails, tickets, docs, GitHub issues, answers, or even code.

Instead of juggling tabs, copy-paste, or separate prompt windows, you talk once and the text appears right where you need it. Your flow stays in the browser tab you’re in: Jira, Gmail, Notion, GitHub, ChatGPT – anywhere.

You don’t have to think like an AI whisperer to get value out of it either. Whispererr is built so you can just talk like a human, in your own language, and let the system do the prompt engineering, translation, and formatting behind the scenes.

A lightweight native host and the Whispererr browser extension listen for your middle-click in focused text fields. While you hold the button, Whispererr records audio, transcribes it via Whisper, wraps it in a smart prompt, and calls your preferred LLM.

The generated text is written straight into the input field you clicked on.
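The flow described above (record, transcribe, wrap in a prompt, call the model, type the result) can be sketched as a small pipeline. This is a minimal illustration, not Whispererr's actual code: the class name, the prompt wording, and the three injected callables are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class DictationPipeline:
    """Chains the stages described above; every stage is an injected callable."""
    transcribe: Callable[[bytes], str]   # audio bytes -> transcript (e.g. Whisper)
    complete: Callable[[str], str]       # prompt -> generated text (your LLM)
    insert_text: Callable[[str], None]   # writes the result into the focused field

    def build_prompt(self, transcript: str, target_language: str) -> str:
        # Hypothetical prompt template; the real wording is not documented.
        return (
            "Rewrite the following dictation as clean, well-structured text "
            f"in {target_language}. Return only the final text.\n\n{transcript}"
        )

    def run(self, audio: bytes, target_language: str = "English") -> str:
        transcript = self.transcribe(audio)
        prompt = self.build_prompt(transcript, target_language)
        result = self.complete(prompt)
        self.insert_text(result)
        return result


# Usage with stand-in stages, so no microphone or network is needed:
typed: list[str] = []
pipeline = DictationPipeline(
    transcribe=lambda audio: "hallo welt",
    complete=lambda prompt: "Hello, world.",
    insert_text=typed.append,
)
pipeline.run(b"\x00\x01")
```

Keeping each stage injectable means the speech model, the LLM, and the typing step can be swapped or tested separately.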

Whispererr eliminates the gap between “I know what I want to say” and “it’s written down – perfectly”. You stay in flow, speak your intent, and let AI handle the typing, formatting, and boilerplate.

Whether you’re a developer, founder, support agent or writer, it feels like adding a tiny assistant into your mouse: one that understands your language, your shortcuts, and your favourite tools.

Use the middle mouse button in any browser input field to start voice-to-AI instantly.

Dictate bug reports, commit messages, emails, or even code; Whispererr and your model do the typing.

Uses Whisper speech recognition to produce fast, accurate transcripts that feed the AI step.

Speak in your native language and let Whispererr translate it for you – for example, dictate in German, flip the language switch to EN, and export the final result in English.

Point Whispererr at your preferred AI backend and prompt presets – you stay in control of outputs and cost.

This is the “no shit, that’s cool” feature. You speak in your native language – for example German – and with a simple language switch Whispererr turns your words into fluent English you can drop into emails, tickets, docs, or code comments.

You stay in your language. Whispererr handles the translation and AI polish, then types the English result directly into whatever input field you were using.

Works everywhere

Whispererr hooks into any input field that your browser exposes, so even “exotic” apps like web.telegram get a native-feeling voice-to-AI upgrade. Middle-click, talk in your usual language, and watch the message box fill up without needing bots, slash commands, or weird integrations.

Need to message an English-speaking business partner? Keep speaking in your native language, flick the English output toggle, and Whispererr types a flawless Telegram message for you. No more noisy voice memos or typo-heavy texts—just clean, translated prose ready to send.

Google AI Studio + Whisper

Google AI Studio is fantastic for assembling Gemini-powered app logic, but its built-in voice capture can’t keep up with Whisper-level accuracy. Whispererr fixes that gap: the AI Enablement + AI Integration Brave extension lets you dictate whole rows of requirements, prompts, or copy, then pass the clean transcript straight into Gemini.

The same flow we show with Telegram on this page now lives inside AI Studio and our site: hold the trigger, speak in your native language, and Whispererr streams the best-in-class speech recognition output into Google’s UI so you can assemble generative AI apps without touching the keyboard.

You’re effectively pairing the market leader in language recognition (Whisper) with the market leader for building generative AI applications (Gemini in Google AI Studio) – a super combo that makes shipping voice-dictated AI products feel instant.

Why Whisper?

Whisper is the foundation that makes Whispererr possible. It’s OpenAI’s open speech-recognition model trained on hundreds of thousands of hours of multilingual audio. Instead of brittle command-style systems, it uses a large transformer to understand natural speech in many languages, cope with different accents, and translate speech into English when you need it.

Long story short: you benefit from world-class research without building your own speech stack – we just point Whispererr at Whisper and everything downstream gets higher quality input.

Encrypted HTTPS to OpenAI

Multilingual transformer brain

Context-aware translation

Runs in the same infra that powers ChatGPT voice.

Whispererr leans on OpenAI’s Whisper stack because it’s built like serious infrastructure, not a toy dictation widget. Audio is streamed over HTTPS straight to OpenAI’s encrypted endpoints, processed inside hardened data centers, and returned as text over the same secure channel. Nothing is stored inside the extension – once the transcription lands, the audio buffer is discarded.

Whisper itself is trained on massive multilingual corpora, so your translation isn’t a literal word swap: the model understands context, tone, and domain-specific phrasing before handing back the text that flows into your LLM.

The result: enterprise-grade voice capture with accurate translation that you can trust inside regulated workflows.
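To make the HTTPS step concrete, here is a rough sketch of how a helper might build the upload request with only the Python standard library. OpenAI's audio API exposes a `transcriptions` endpoint and a `translations` endpoint (the latter returns English text). Whether Whispererr uses the translation endpoint or translates in the LLM step is not stated here, so treat the `translate_to_english` switch, the function name, and the file name as assumptions.

```python
import urllib.request
import uuid

API_BASE = "https://api.openai.com/v1/audio"


def build_audio_request(audio: bytes, api_key: str, translate_to_english: bool = False,
                        filename: str = "dictation.wav") -> urllib.request.Request:
    """Build (without sending) a multipart POST for OpenAI's audio endpoints."""
    # /translations returns English text; /transcriptions keeps the spoken language.
    endpoint = "translations" if translate_to_english else "transcriptions"
    boundary = uuid.uuid4().hex
    body = b"".join([
        f"--{boundary}\r\n".encode(),
        b'Content-Disposition: form-data; name="model"\r\n\r\nwhisper-1\r\n',
        f"--{boundary}\r\n".encode(),
        f'Content-Disposition: form-data; name="file"; filename="{filename}"\r\n'.encode(),
        b"Content-Type: application/octet-stream\r\n\r\n",
        audio,
        f"\r\n--{boundary}--\r\n".encode(),
    ])
    return urllib.request.Request(
        f"{API_BASE}/{endpoint}",
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": f"multipart/form-data; boundary={boundary}",
        },
        method="POST",
    )


request = build_audio_request(b"RIFF....", "sk-your-key", translate_to_english=True)
print(request.full_url)  # https://api.openai.com/v1/audio/translations
```

Passing the request to `urllib.request.urlopen` would send it over TLS and return a JSON body with a `text` field. Once that text is read, nothing in this sketch keeps the audio bytes around.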

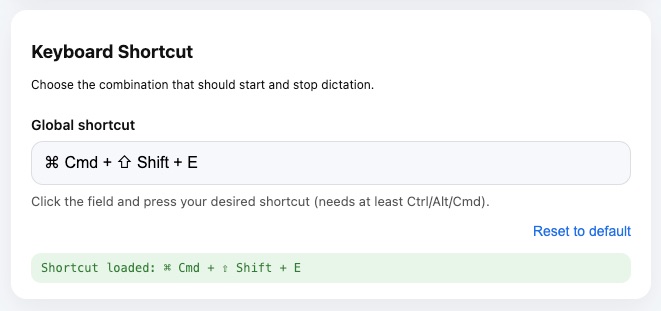

Whispererr comes with a couple of professional “in-the-weeds” controls. Click the toolbar icon to trigger recording immediately, or use the floating dot in each input. Prefer shortcuts? Map any key combo – even your middle mouse button with tools like Keyboard Maestro or BetterTouchTool – and fire dictation without touching the UI.

Assign a global shortcut right in Whispererr or bridge your middle mouse button via Keyboard Maestro / BetterTouchTool / custom scripts. Hold that trigger, talk, and Whispererr handles the rest.

Whispererr is built for people who think faster than they can type. It keeps you in the tools you already use, but removes the slow part – turning half-formed ideas into clean, structured text.

Dictate commit messages, PR descriptions, GitHub issues, or “explain this code” prompts while your hands stay on the keyboard. Whispererr can translate from your native language, tighten up the wording, and post it straight into your dev tools.

Talk through what the customer is struggling with and let Whispererr draft a clear, empathetic reply in English. Use your own language to think and reason, then send polished responses that match your team’s tone of voice.

Brain-dump product ideas, meeting notes or rough outlines into any text field. Whispererr turns the ramble into structured specs, summaries, or first-draft copy that you can tweak instead of writing from scratch.

Whispererr isn’t a generic dictation toy. It’s for people who already live in their terminal, IDE, or browser dev tools, and want to move even faster: software engineers, cloud/SRE/DevOps folks, systems engineers, power users who tweak everything, and founders who ship their own product.

If you’re comfortable juggling tabs, APIs, and automation, Whispererr fits right in. If you’d rather have IT set it up for you, this probably isn’t your tool (yet).

It also assumes you can control your environment. Whispererr is engineered for focused makers working from a studio, home office, or any setup where talking to your computer is natural — not for open-plan floors where seventeen colleagues share the same desk pod. If you thrive in a quiet space, work remotely, and invest in good microphones and fast workflows, you’re exactly who we built it for.

In short: if you build or operate software and know how to grab an API token, Whispererr is for you.

Under the hood, Whispererr is a small set of sharp, opinionated features designed to kill busywork: speak once, get clean output, and keep your hands free for the parts that actually need your brain.

Hold the middle mouse button in any input field to start recording instantly. No extra windows, widgets, or overlays – just click, talk, release.

Speak in your native language – for example German – and let Whispererr translate and polish the result into clear English that’s ready to send.

Switch between “native language in, same language out” and German → English translation from the floating menu so you always dictate in whatever language feels natural.

Jira, Gmail, Notion, GitHub, helpdesk, your internal admin UI – if it has a text box in the browser, Whispererr can type into it.

A lightweight native host handles audio locally, sends just what’s needed to your models, and keeps the whole flow feeling instant.

Use your own OpenAI API key from the macOS Keychain and wire Whispererr into the models and prompts that fit your workflow and budget.
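For readers curious how a browser extension talks to a native host: Chrome and Brave use the native messaging protocol, where each message is UTF-8 JSON preceded by a 32-bit length in native byte order, exchanged over stdin and stdout. The sketch below shows that framing only. The message shape is hypothetical, and nothing here is taken from Whispererr's actual host.

```python
import io
import json
import struct
from typing import BinaryIO, Optional


def read_message(stream: BinaryIO) -> Optional[dict]:
    """Read one length-prefixed JSON message; return None at end of stream."""
    header = stream.read(4)
    if len(header) < 4:
        return None
    (length,) = struct.unpack("=I", header)  # 32-bit length, native byte order
    return json.loads(stream.read(length).decode("utf-8"))


def write_message(stream: BinaryIO, message: dict) -> None:
    """Write one JSON message preceded by its 32-bit length."""
    payload = json.dumps(message).encode("utf-8")
    stream.write(struct.pack("=I", len(payload)))
    stream.write(payload)
    stream.flush()


# Round trip through an in-memory buffer; a real host would use
# sys.stdin.buffer and sys.stdout.buffer instead.
buffer = io.BytesIO()
write_message(buffer, {"type": "transcript", "text": "Hello"})  # hypothetical message shape
buffer.seek(0)
echoed = read_message(buffer)
```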

If you use chatgpt.com, you see its walkie-talkie feature every day: press the mic, talk, and a few seconds later perfectly readable text appears in the window. Not the usual clunky word-by-word transcription other tools spit out, but true speech recognition: fast, clean, stable.

There’s just one catch: you only get that quality on chatgpt.com.

Everywhere else online — comments, forms, support tickets, email, admin interfaces — you’re stuck typing the old-fashioned way. So it was obvious: we needed a way to bring this high-end speech recognition to any input field across the entire internet. One button → mic opens → you talk → the text lands right where your cursor is. Done.

Sure, there was a Chrome extension that tried to do something similar, but of course it cost 19 €/month and came with a bloated UI and endless options. When the trial ended, the UI just faded to black. So we said: if the world won’t hand us a good tool, we’ll build it ourselves.

Not the old-school way either — we’re doing it 2025 style.

The software? Whispererr – VoiceToEverywhere.

The heart of the workflow starts inside Visual Studio Code with an extension called “Whisper Assistant” (not our Whispererr system, the stock extension). You hit record, speak freely in German, and the extension outputs clean English text:

Let’s say I don’t have a Brave Browser extension and imagine that you go to a field where you can enter something. And then the extension recognizes that you are in a field and then a small dot appears and you can click on the dot. And with the small dot, the dictation starts immediately, where you can dictate in OpenAI Whisper. When you are done dictating, you click on the dot again and then the whole thing is automatically inserted where your cursor is.

use the macOS Keychain for the OpenAI token: o-api

design proposal for the UI is attached.

(Original first prompt, btw! plus two UI concept screenshots.)

This is where the first piece of magic happens:

No structure. No analysis. No decomposition into components.

Just your words → dropped as a prompt in the editor. That’s the starting point.

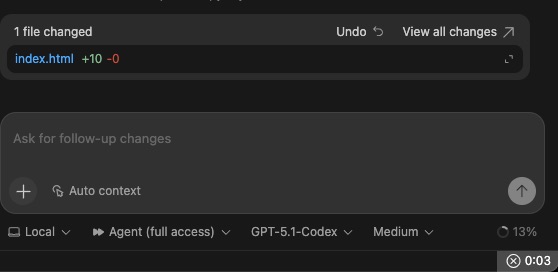

Then the machine that does the heavy lifting takes over: the Codex 5.1 extension inside VS Code. Codex reads the Whisper prompt and handles the entire technical implementation. Not “a little assist” — we’re talking full ownership:

Please also explore the rest of this site: we walk through how generative AI doesn’t just write code, it orchestrates the entire file and folder structure step by step.

Codex writes line after line like a developer deep in the tunnel.

The extension ships fully generated — without you typing a single character. After roughly 90 minutes (Brave made loading the extension a little… stubborn) the first version already did this:

Voilà — Whispererr – VoiceToEverywhere was born.

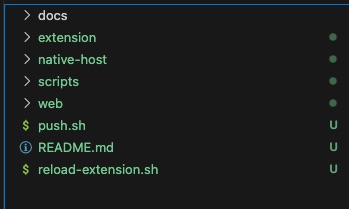

The web presence was dictated too — first in German, Codex answered in English. The machine generated the full microsite, static files,

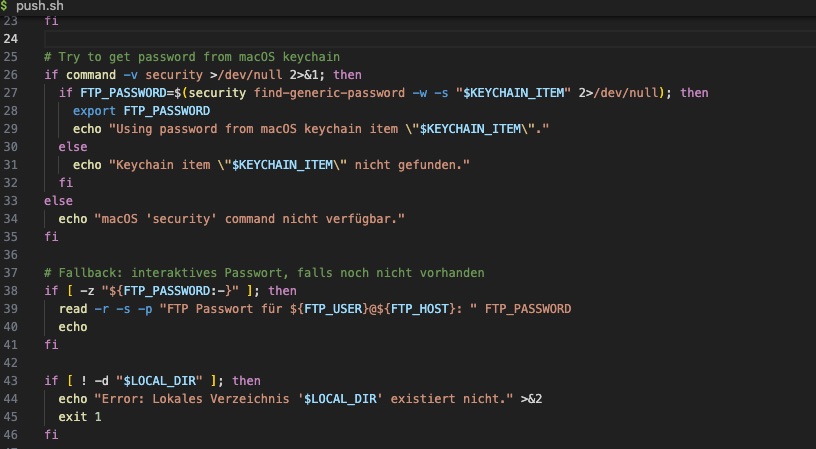

layout, styles, text, JS interactions, and finally a push.sh script for FTP uploads.

I created a folder named “web”; inside that folder we still need a small website, basically a product page that explains what the software does, how it works, what the advantages are — around 500 words. Please create an extra CSS file and an extra JavaScript file. Also make another folder called “images”; I’ll drop the assets in myself. Just start — language should be English.

please create a push.sh script for FTP upload with the following data: ...

Codex delivered:

In other words: every single piece you need for website deployment — generated.

Of course there are steps AI can’t automate because they require access to real systems (our site lives on a traditional hoster, not a hyperscaler API platform):

That’s classic infrastructure work. A human has to click through those systems because AI can’t log into your hoster (again: hoster, not an API-first hyperscaler). Once that’s done, the machine takes over again: push.sh handles deployment as if we were on a full CI/CD stack.

A senior engineer plans, reviews, and signs off. The machine does the programming.

You define the requirement: what should happen, how should it behave, what should the UX feel like?

Whisper → turns speech into text. Codex → builds code, architecture, files, events, deployment. All generated, all end-to-end. No typing. No fiddling in an editor.

That’s not “AI integration”. That’s AI as the full software producer — you’re the architect, the machine is the crew on site. That’s exactly what 2025 calls an “AI application par excellence”. 🚀🔥

Whispererr was born out of a simple frustration: we think in paragraphs, but keyboards make us type in slow motion. With Whispererr, “coding”, “writing” and “explaining” become something you say – not something you grind out character by character.

Whispererr itself is just the tool. The only ongoing cost comes from your OpenAI usage – mainly the Whisper transcription API and the language model you plug in. The punchline: voice feels like a superpower, but the bill usually looks like loose change.

As of today, the OpenAI Whisper API is billed per minute of audio (around a few tenths of a Euro per hour), and the text model is billed per 1,000 tokens – a few cents for hundreds of messages. Light or heavy use, you only pay for what you actually dictate.

You store your own API key in the macOS Keychain, so you keep full control. If pricing changes, your costs simply follow whatever your OpenAI account charges.
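As a rough sketch of the Keychain lookup, a helper could shell out to the macOS `security` tool, whose `find-generic-password -s <service> -w` form prints only the stored secret. The service name `o-api` comes from the original prompt quoted earlier on this page. Treating it as the Keychain service name, along with the function names below, is an assumption.

```python
import subprocess
from typing import Callable, Sequence

KEYCHAIN_SERVICE = "o-api"  # assumed service name for the Keychain item


def keychain_command(service: str = KEYCHAIN_SERVICE) -> list[str]:
    """Return the `security` invocation that prints only the stored secret."""
    return ["security", "find-generic-password", "-s", service, "-w"]


def _run_security(command: Sequence[str]) -> str:
    # Runs the macOS `security` CLI; raises CalledProcessError if the item is missing.
    return subprocess.run(command, capture_output=True, text=True, check=True).stdout


def read_api_key(service: str = KEYCHAIN_SERVICE,
                 run: Callable[[Sequence[str]], str] = _run_security) -> str:
    """Fetch the API key; `run` is injectable so the lookup can be tested off macOS."""
    key = run(keychain_command(service)).strip()
    if not key:
        raise RuntimeError(f"No secret stored for Keychain service {service!r}")
    return key
```

On a Mac, `read_api_key()` would return the stored token. Elsewhere, pass a stand-in `run` function to exercise the logic.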

Say you go hard and dictate around 2 hours every workday – that’s roughly 50 hours per month. With current Whisper pricing (about 0.006 USD per minute), the math looks like: 50 hours × 60 minutes × 0.006 USD ≈ 18 USD per month for transcription.

In practice that’s in the ballpark of 15–25 € per month for intensive daily usage. Dictate less? Pay less.
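To see how the transcription part of the bill scales with usage, here is a small calculation using the per-minute rate quoted above. It covers Whisper only. Language-model charges depend on the model you plug in and are left out, and the rate itself may have changed since this was written.

```python
WHISPER_USD_PER_MINUTE = 0.006  # rate quoted above; check current pricing


def monthly_transcription_cost(hours_per_month: float,
                               usd_per_minute: float = WHISPER_USD_PER_MINUTE) -> float:
    """Return the Whisper transcription cost in USD for a month of dictation."""
    return round(hours_per_month * 60 * usd_per_minute, 2)


for hours in (10, 25, 50):
    print(f"{hours:>2} h/month -> {monthly_transcription_cost(hours):.2f} USD")
# 10 h/month -> 3.60 USD
# 25 h/month -> 9.00 USD
# 50 h/month -> 18.00 USD
```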

If you’re paid even 30 € per hour, saving just 10 minutes per day easily covers the monthly API bill. Most people gain much more – especially if they spend hours writing tickets, emails, or documentation.

So you swap “hunting for letters on a keyboard” for talking in your own language, and the cost difference is tiny compared to the time you get back.

Stop pecking at a keyboard and start talking. Whispererr turns minutes of typing into seconds of speaking, so you can get back to building, shipping, and thinking instead of hunting for the right keys.

Draft the same email, ticket, or spec in a third of the time by speaking it once instead of hammering it out by hand.

Replace daily “writing chores” – status updates, summaries, replies – with a few minutes of voice and instant AI output.

Speak in your own language, get polished English in the same input field, and never bounce between tools or tabs.